Introduction

tf-bindgen is early in development. Expect breaking changes.

Before we start using tf-bindgen, we will explain a few concepts and ideas. We recommend basic knowledge of Terraform and Infrastructure as Code (IaC). If you have no experience with Terraform, we also recommend reading up on the basics and some examples of Terraform HCL.

You can follow this link to start with an introduction provided by HashiCorp.

What is tf-bindgen?

In 2022, HashiCorp released a tool called CDK for Terraform (CDKTF) to the public. It allows generating your IaC deployments using high-level languages, like TypeScript, Python and Java, instead of relying on HashiCorp's declarative language HCL. That said, it does not come with support for Rust.

At this point, tf-bindgen comes into play. It generates Rust bindings similar to those CDKTF generates for Java, relying heavily on the builder pattern. On the downside, it does not use JSII like CDKTF, so it will not integrate with CDKTF but coexists alongside it.

How does tf-bindgen work?

Similar to CDKTF, tf-bindgen uses the provider schema provided by Terraform to generate bindings with a structure similar to HCL. These structures are then used to generate the Terraform configuration as JSON files. Terraform can use these files to plan and deploy your infrastructure. Simplified: tf-bindgen is, like CDKTF, a glorified JSON generator for Terraform IaC.
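To make the "JSON generator" idea concrete, here is a minimal, self-contained sketch that renders a single resource in Terraform's JSON configuration syntax using only the standard library. It is not how tf-bindgen is implemented; the helper names json_string, json_object and render_resource are hypothetical and exist only for this illustration.

```rust
/// Encodes a string as a JSON string literal.
/// Assumption: only backslashes and double quotes need escaping here;
/// control characters are not handled in this sketch.
fn json_string(text: &str) -> String {
    let escaped = text.replace('\\', "\\\\").replace('"', "\\\"");
    format!("\"{escaped}\"")
}

/// Renders a JSON object from (key, already-encoded JSON value) pairs.
fn json_object(members: &[(&str, String)]) -> String {
    let body: Vec<String> = members
        .iter()
        .map(|(key, value)| format!("\"{key}\": {value}"))
        .collect();
    format!("{{{}}}", body.join(", "))
}

/// Wraps the attributes in the nesting Terraform expects:
/// resource -> <resource type> -> <resource name> -> attributes.
fn render_resource(resource_type: &str, name: &str, attributes: &[(&str, String)]) -> String {
    let named = json_object(&[(name, json_object(attributes))]);
    let typed = json_object(&[(resource_type, named)]);
    json_object(&[("resource", typed)])
}
```

Calling render_resource("docker_image", "postgres", &[("name", json_string("postgres:latest"))]) produces a document with the same meaning as a resource "docker_image" "postgres" block in HCL.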

Getting Started

In this section, we will create a simple deployment of a Postgres database server. The resulting source code will be equivalent to the following Terraform HCL:

terraform {

required_providers {

docker = {

source = "kreuzwerker/docker"

version = "3.0.2"

}

}

}

provider "docker" {}

resource "docker_image" "postgres" {

name = "postgres:latest"

}

resource "docker_container" "postgres" {

image = docker_image.postgres.image_id

name = "postgres"

env = [

"POSTGRES_PASSWORD=example"

]

}

Before we start, we need to prepare our setup by adding the required dependencies:

cargo add tf-bindgen

cargo add tf-docker

For this section, we will not deal with generating our own bindings, but use some existing ones. If you are interested in this topic, you can read about it in Generating Rust Bindings.

Configure a Provider

We will start by setting up a stack to store our resources. Unlike in CDKTF, it is not necessary to create an App.

use tf_bindgen::Stack;

let stack = Stack::new("postgres");

Afterwards, we will configure our Docker provider. Because we use the default configuration, we do not have to call any setters and can create our provider immediately.

use tf_docker::Docker;

Docker::create(&stack).build();

Note that the provider will only be configured for the given stack.

Create a Resource

After we have configured our provider, we can use the resources and data sources provided by our bindings. In our case, we only use the docker_image and docker_container resources. We can import these into our deployment:

use tf_docker::resource::docker_image::*;

use tf_docker::resource::docker_container::*;

Analogous to the code above, we can find the corresponding data sources under tf_docker::data::<data source name>.

We can now use the imported types to create our Docker image:

let image = DockerImage::create(&stack, "postgres-image")

.name("postgres:latest")

.build();

Using this snippet, we add a new Docker image to our stack. It is important that we specify an object ID, in our case "postgres-image", in addition to the associated stack. This ID is expected to be unique within the used scope, in this case the stack.
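The uniqueness requirement can be pictured with a small, self-contained sketch. How tf-bindgen actually tracks or enforces IDs is not described here, so the IdRegistry type and its register method are purely hypothetical.

```rust
use std::collections::HashSet;

/// Hypothetical per-scope registry of object IDs (not a tf-bindgen type).
#[derive(Default)]
struct IdRegistry {
    used: HashSet<String>,
}

impl IdRegistry {
    /// Records `id` for this scope and reports an error if it was already taken.
    fn register(&mut self, id: &str) -> Result<(), String> {
        // `HashSet::insert` returns false when the value was already present.
        if self.used.insert(id.to_string()) {
            Ok(())
        } else {
            Err(format!("duplicate object id '{id}' in scope"))
        }
    }
}
```

In this model, registering "postgres-image" and "postgres-container" in the same scope succeeds, while registering "postgres-image" a second time is rejected.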

Referencing an Attribute

In the next step, we will create our postgres container. To do that, we can use a similar builder exposed by tf-docker:

DockerContainer::create(&stack, "postgres-container")

.name("postgres")

.image(&image.image_id)

.env(["POSTGRES_PASSWORD=example"])

.build();

To use the Docker image ID generated by "postgres-image", we have to reference the corresponding field in our image resource (similar to the HCL example).

If you play around with your Docker image configuration a bit, you may notice that you cannot set the image ID. This is because image_id is a computed/read-only field exposed by the Docker image resource (see docker_image reference).
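In Terraform's JSON syntax, a reference to a computed attribute is written as a string containing an interpolation such as "${docker_image.postgres.image_id}". The following sketch shows how a value could be either a literal or such a reference. The Attr enum is hypothetical and is not the Value type used by tf-bindgen; the resource name postgres is taken from the HCL example.

```rust
/// Hypothetical attribute value: a literal string or a reference to
/// another resource's attribute (for illustration only).
enum Attr {
    Literal(String),
    Reference {
        resource_type: String,
        name: String,
        attribute: String,
    },
}

impl Attr {
    /// Encodes the value as a JSON string for a Terraform JSON configuration.
    /// Assumption: literals contain no characters that need escaping.
    fn to_json(&self) -> String {
        match self {
            Attr::Literal(text) => format!("\"{text}\""),
            // References become "${<type>.<name>.<attribute>}".
            Attr::Reference { resource_type, name, attribute } => {
                format!("\"${{{resource_type}.{name}.{attribute}}}\"")
            }
        }
    }
}
```

Because the reference is resolved by Terraform at plan or apply time, the generated configuration never needs to know the actual image ID.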

Complex Resources

While creating a Docker image and container is straightforward, things become a bit harder when using heavily nested resources (e.g. Kubernetes resources). In this section, we will create a Kubernetes pod with an NGINX container running inside. It will be equivalent to the following HCL code:

terraform {

required_providers {

kubernetes = {

source = "kubernetes"

version = "3.0.2"

}

}

}

provider "kubernetes" {

config_path = "~/.kube/config"

}

resource "kubernetes_pod" "nginx" {

metadata {

name = "nginx"

}

spec {

container {

name = "nginx"

image = "nginx"

port {

container_port = 80

}

}

}

}

Similar to the docker example in the previous section, we need to add our dependencies:

cargo add tf-bindgen

cargo add tf-kubernetes

In this case, we will use the Kubernetes bindings instead of the Docker bindings. As in the Docker example, we start by setting up our stack and Kubernetes provider:

use tf_bindgen::Stack;

use tf_kubernetes::Kubernetes;

use tf_kubernetes::resource::kubernetes_pod::*;

let stack = Stack::new("postgres");

Kubernetes::create(&stack)

.config_path("~/.kube/config")

.build();

Create a Complex Resource

You have already seen how to set attributes of Terraform resources. We will use the same kind of setters, like config_path, to set our nested structures. It is important that we create these nested structures before we pass them to the setter.

let metadata = KubernetesPodMetadata::builder()

.name("nginx")

.build();

As this snippet shows, the exposed builder will not need a scope or an ID to create a nested type. In addition, we will not use create but rather builder to create our builder object.

We can repeat this for our nested type spec:

let port = KubernetesPodSpecContainerPort::builder()

.container_port(80)

.build();

let container = KubernetesPodSpecContainer::builder()

.name("nginx")

.image("nginx")

.port(port)

.build();

let spec = KubernetesPodSpec::builder()

.container(vec![container])

.build();

You can notice a few things:

- Nested types of nested types are created using the same builder pattern,

- they can be passed using the setter, and

- we can pass multiple nested types by using an array (in our case using vec!).
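For readers unfamiliar with this style of builder, the following standard-library-only sketch shows the general shape of a builder for a nested type: optional fields are collected through chained setters and checked in build. The Port, Container and ContainerBuilder types are hypothetical stand-ins, not the code generated by tf-bindgen.

```rust
#[derive(Debug, Clone, PartialEq)]
struct Port {
    container_port: u16,
}

#[derive(Debug, Clone, PartialEq)]
struct Container {
    name: String,
    image: String,
    port: Vec<Port>,
}

/// Collects the fields of `Container`; required fields start out unset.
#[derive(Default)]
struct ContainerBuilder {
    name: Option<String>,
    image: Option<String>,
    port: Vec<Port>,
}

impl Container {
    /// Nested types need neither a scope nor an ID, so `builder` takes no arguments.
    fn builder() -> ContainerBuilder {
        ContainerBuilder::default()
    }
}

impl ContainerBuilder {
    fn name(&mut self, value: &str) -> &mut Self {
        self.name = Some(value.to_string());
        self
    }

    fn image(&mut self, value: &str) -> &mut Self {
        self.image = Some(value.to_string());
        self
    }

    /// Repeated nested blocks are passed as a vector.
    fn port(&mut self, value: Vec<Port>) -> &mut Self {
        self.port = value;
        self
    }

    /// Panics if a required field was never set.
    fn build(&mut self) -> Container {
        Container {
            name: self.name.clone().expect("missing field 'name'"),
            image: self.image.clone().expect("missing field 'image'"),
            port: self.port.clone(),
        }
    }
}
```

Returning &mut Self from every setter is what allows the calls to be chained in a single expression that ends with build.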

To finalize it, we can use our created metadata and spec object and pass it to our Kubernetes pod resource:

KubernetesPod::create(&stack, "nginx")

.metadata(metadata)

.spec(spec)

.build();

Using tf_bindgen::codegen::resource

While using builders is a nice way to set these attributes and allows very flexible code, using multiple builders in a row results in higher complexity than using HCL. That is the reason we implemented the tf_bindgen::codegen::resource macro. It allows using a simplified HCL syntax to create resources. Our Kubernetes pod resource using this macro would look like:

tf_bindgen::codegen::resource! {

&stack,

resource "kubernetes_pod" "nginx" {

metadata {

name = "nginx"

}

spec {

container {

name = "nginx"

image = "nginx"

port {

container_port = 80

}

}

}

}

}

This macro uses the same builders as shown in the previous section and will return the resulting resource.

Constructs

Having a way to modularize our deployment code can decrease code complexity and reduce code duplication. tf-bindgen will utilize constructs similar to Terraform CDK Constructs and will use the Construct derive macro to implement the required traits.

In this section, we will create a construct to deploy a nginx pod to Kubernetes. To create our construct, we will start with creating a struct and adding the Construct derive macro:

use std::rc::Rc;

use tf_bindgen::codegen::Construct;

use tf_bindgen::Scope;

#[derive(Construct)]

pub struct Nginx {

#[construct(id)]

name: String,

#[construct(scope)]

scope: Rc<dyn Scope>

}

In addition, we added two fields to our struct: name and scope. Both fields are necessary and we have to add the #[construct(id)] and #[construct(scope)] annotation to these fields.

Using this declaration, our derive macro will do nothing more than implementing the Scope trait for our Nginx struct. This trait is necessary to use this struct instead of stack in resources and data sources.
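The idea of a construct acting as a scope for further resources can be sketched without tf-bindgen. The DemoScope trait below is a hypothetical stand-in and makes no claim about the methods of the real Scope trait; it only shows why a construct stores its parent as Rc<dyn ...> and can itself be used wherever a scope is expected.

```rust
use std::rc::Rc;

/// Hypothetical stand-in for a scope trait (not `tf_bindgen::Scope`).
trait DemoScope {
    /// Path from the outermost scope down to this element.
    fn path(&self) -> String;
}

/// Outermost scope, comparable to a stack.
struct DemoStack {
    name: String,
}

impl DemoScope for DemoStack {
    fn path(&self) -> String {
        self.name.clone()
    }
}

/// A construct keeps a shared handle to its parent scope.
struct DemoConstruct {
    name: String,
    scope: Rc<dyn DemoScope>,
}

impl DemoScope for DemoConstruct {
    fn path(&self) -> String {
        // Prefix the own name with the parent's path.
        format!("{}/{}", self.scope.path(), self.name)
    }
}
```

Because DemoConstruct implements the same trait as DemoStack, constructs can be nested to any depth, and anything created inside one is namespaced by the path of its enclosing scopes.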

Generating a Builder

In most cases, it will be necessary to pass extra information to our construct. So we need a way to add and set parameters on our construct. We can use the builder option of the Construct derive macro by adding the #[construct(builder)] annotation to our struct. In addition, we will add two fields, namespace and image, to our struct:

use std::rc::Rc;

use tf_bindgen::codegen::{Construct, resource};

use tf_bindgen::Scope;

#[derive(Construct)]

#[construct(builder)]

pub struct Nginx {

#[construct(id)]

name: String,

#[construct(scope)]

scope: Rc<dyn Scope>,

#[construct(setter(into_value))]

namespace: Value<String>,

#[construct(setter(into_value))]

image: Value<String>,

}

This code snippet allows creating an Nginx construct using the following builder:

Nginx::create(scope, "<name>")

.namespace("default")

.image("nginx")

.build();

Note that we have to implement the build function ourselves. But before we implement this function, we must consider the different setter options:

- #[construct(setter)] or no annotation: Use this to generate setters taking the same type as the field as input.

- #[construct(setter(into))]: Generates setters taking Into<T> as an argument, where T is the type of the field.

- #[construct(setter(into_value))]: Generates setters taking IntoValue<T> as an argument, where T is the type of the field. In addition, this field must be of type Value<T>.

- #[construct(setter(into_value_list))]: Generates setters taking objects implementing IntoValueList<T> as an argument. In addition, this field must be of type Vec<Value<T>>.

- #[construct(setter(into_value_set))]: Generates setters taking objects implementing IntoValueSet<T> as an argument. In addition, this field must be of type HashSet<Value<T>>.

- #[construct(setter(into_value_map))]: Generates setters taking objects implementing IntoValueMap<T> as an argument. In addition, this field must be of type HashMap<String, Value<T>>.

In general, it is recommended to use Value wrapped types to ensure better compatibility with tf-bindgen. It also allows using references as a Value.
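The difference between a plain setter and an into-style setter can be shown with a hand-written equivalent. This is only a sketch of what such setters might look like; DemoBuilder and its fields are hypothetical, and the code generated by the derive macro may differ.

```rust
/// Hypothetical builder with one plain setter and one `Into`-based setter.
#[derive(Default)]
struct DemoBuilder {
    replicas: Option<u32>,
    namespace: Option<String>,
}

impl DemoBuilder {
    /// Plain setter: the argument must have exactly the field's type.
    fn replicas(&mut self, value: u32) -> &mut Self {
        self.replicas = Some(value);
        self
    }

    /// `into`-style setter: accepts anything convertible into the field's type,
    /// so both `&str` and `String` work for a `String` field.
    fn namespace(&mut self, value: impl Into<String>) -> &mut Self {
        self.namespace = Some(value.into());
        self
    }
}
```

The into variants trade a slightly more complex signature for call sites that do not need explicit conversions such as to_string().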

Creating Resources

Finally, we want to create our resources. We will create the already mentioned build function for that. It is important to note that we will not implement the function for Nginx but rather for NginxBuilder, a type generated by our Construct derive macro.

To implement our build function, we will start by creating our construct type. For that, we need to clone our name and scope fields. Because every other field is wrapped inside an Option type, we need to clone and unwrap them (in our case, we will use expect instead). In addition, it is essential to wrap our type inside a reference counter Rc, because this is required to use a construct as a scope.

After we have created our construct, we can use it to create our resources. The following example shows an implementation for an nginx container inside a Kubernetes pod:

impl NginxBuilder {

pub fn build(&mut self) -> Rc<Nginx> {

let this = Rc::new(Nginx {

name: self.name.clone(),

scope: self.scope.clone(),

namespace: self.namespace.clone().expect("missing field 'namespace'"),

image: self.image.clone().expect("missing field 'image'")

});

let name = &this.name;

tf_bindgen::codegen::resource! {

&this, resource "kubernetes_pod" "nginx" {

metadata {

namespace = &this.namespace

name = format!("nginx-{name}")

}

spec {

container {

name = "nginx"

image = &this.image

port {

container_port = 80

}

}

}

}

};

this

}

}

Outputs

TODO

Naming Conventions

For Generated Structures

- Type names of nested types are created by concatenating the parent type names with the nested type name and converting the result to camel case (e.g. the type name of container inside KubernetesPodSpec will be KubernetesPodSpecContainer; see section Complex Resources).

- Attributes are named identically in HCL and tf-bindgen, so you can stick to the documentation of Terraform (see Terraform Registry).

- In case an attribute is called build, it will be renamed to build_ to avoid conflicts with the build function of the builder.
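The naming rules above can be expressed as two small functions. This is a sketch of the described convention, not the generator's actual code; the function names to_upper_camel, nested_type_name and setter_name are hypothetical.

```rust
/// Converts one snake_case segment such as `kubernetes_pod` to `KubernetesPod`.
fn to_upper_camel(segment: &str) -> String {
    segment
        .split('_')
        .filter(|part| !part.is_empty())
        .map(|part| {
            let mut chars = part.chars();
            match chars.next() {
                // Uppercase the first character and keep the rest unchanged.
                Some(first) => first.to_uppercase().chain(chars).collect::<String>(),
                None => String::new(),
            }
        })
        .collect()
}

/// Concatenates the parent names and the nested name into one type name.
fn nested_type_name(path: &[&str]) -> String {
    path.iter().map(|segment| to_upper_camel(segment)).collect()
}

/// Keeps attribute names as in HCL, except for `build`, which would
/// clash with the builder's own `build` function.
fn setter_name(attribute: &str) -> String {
    if attribute == "build" {
        "build_".to_string()
    } else {
        attribute.to_string()
    }
}
```

For the path kubernetes_pod, spec, container this yields KubernetesPodSpecContainer, matching the type used in the Complex Resources section.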

List of Existing Bindings

The following table lists the crates available on crates.io together with the corresponding Terraform provider and provider version.

| Crate | Terraform Provider | Provider Version |

|---|---|---|

| tf-kubernetes | kubernetes | 2.19.0 |

| tf-docker | docker | 3.0.2 |

Examples

This section contains a list of repositories using tf-bindgen to deploy infrastructure:

- Git Server Constructs: a collection of constructs used to deploy a Git server based on Gitea to Kubernetes.

Generate Rust Bindings

In this section, we will go over the things you need to do to set up custom generated bindings. We recommend using an empty library crate for doing so.

Adding tf-bindgen

We will start by adding tf-bindgen to our project. Because it is used by both our build script and the generated code, we need to add it twice. You can use the following commands to add the latest version from the repository:

cargo add -p "docker" \

--git "https://github.com/robert-oleynik/tf-bindgen.git" \

"tf-bindgen"

cargo add --build -p "docker" \

--git "https://github.com/robert-oleynik/tf-bindgen.git" \

"tf-bindgen"

Setup Build Script

As already mentioned, we will leverage Cargo's support for build scripts to generate the bindings for our provider. To do so, we will create a new build.rs in our crate:

// build.rs

use std::path::PathBuf;

fn main() {

println!("cargo:rerun-if-changed=terraform.toml");

let bindings = tf_bindgen::Builder::default()

.config("terraform.toml")

.generate()

.unwrap();

let out_dir = PathBuf::from(std::env::var("OUT_DIR").unwrap());

bindings.write_to_file(out_dir, "terraform.rs").unwrap();

}

This script will read the provider specified in the terraform.toml file. In addition, it will parse the provider information and generate the corresponding Rust structs for it. The resulting bindings will be stored in the terraform.rs inside our build directory.

As you may have noticed, we did not create a terraform.toml yet. We will use this file to specify the providers we want to generate bindings for. A provider can be specified by adding <provider name> = "<provider version>" to the [provider] section of this TOML document. We will utilize the same version format as used by Cargo (see Specifying Dependencies). In the example below, we will use the docker provider locked to version 3.0.2:

# terraform.toml

[provider]

"kreuzwerker/docker" = "=3.0.2"

Setup Module

Now we have generated our bindings, but we did not import them yet. To achieve that, we need to include the generated terraform.rs file into our crate.

// src/lib.rs

include!(concat!(env!("OUT_DIR"), "/terraform.rs"));

tf-bindgen will declare a module for each provider specified. So if you only declared a single provider, you may want to re-export these bindings to the current scope (e.g. pub use docker::*; in case of the docker provider).

Improving Compile Duration

To measure the compile duration in cargo, we will run cargo with the timings flag:

cargo build --timings

This will give some more details on what package takes how long. In addition, we will use the following commands to set up our project:

cargo new --bin test-compile-time

cd test-compile-time

cargo add --git https://github.com/robert-oleynik/tf-bindgen tf-bindgen

cargo add --git https://github.com/robert-oleynik/tf-kubernetes

We will use the tf-kubernetes provider because it is known for having long compile times. To finalize our preparations, we will add the following source code to our src/main.rs:

use tf_kubernetes::kubernetes::Kubernetes;

use tf_bindgen::Stack;

fn main() {

let stack = Stack::new("compile-time");

Kubernetes::create(&stack).build();

}

To start our tests, we will run cargo build --timings to receive an initial measurement for our repository. The following table contains the result of this run:

| Unit | Total | Codegen |

|---|---|---|

| tf-kubernetes v0.1.0 | 160.4s | 110.3s (69%) |

| test-compile-time v0.1.0 bin "test-compile-time" | 24.7s | |

| tf-kubernetes v0.1.0 build script (run) | 5.4s | |

| Total | 206.3s | |

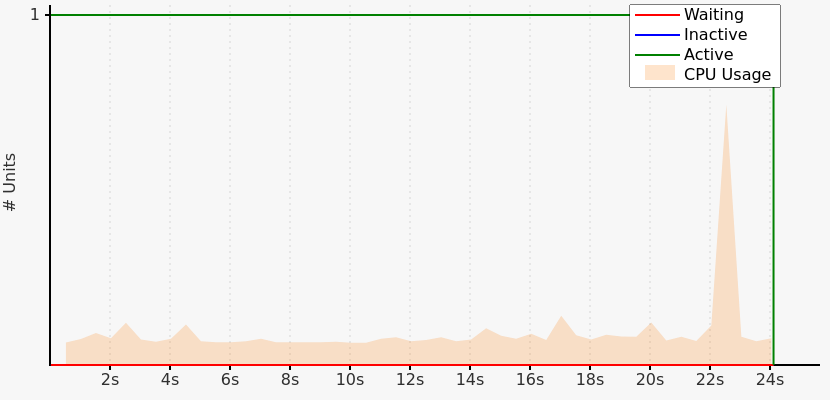

Although tf-kubernetes has the longest compile time, we will focus on test-compile-time, because of incremental builds we only have to compile tf-kubernetes once. The following diagram contains the CPU usage for only the compilation test-compile-time:

Because our program is simple and short, we know most of this time is due to linking our executable. In addition, we can see that our linker is only using a single CPU core most of the time.

Using mold as a linker

As stated on their GitHub page, “mold is a faster drop-in replacement for existing Unix linkers. […] mold aims to enhance developer productivity by minimizing build time, particularly in rapid debug-edit-rebuild cycles”. This matches our needs, so we will set mold as our linker in this section.

We will start with replacing our current linker with mold by adding .cargo/config.toml to our project:

# .cargo/config.toml

[target.x86_64-unknown-linux-gnu]

linker = "clang"

rustflags = ["-C", "link-arg=-fuse-ld=/usr/bin/mold"]

Now we can rerun our test using the following commands:

cargo clean # ensure same test conditions

cargo build --timings

While our total compile time only drops by about 12%, the duration of building test-compile-time is reduced by about 84%.

| Unit | Total | Codegen |

|---|---|---|

| tf-kubernetes v0.1.0 | 159.9s | 111.0s (69%) |

| test-compile-time v0.1.0 bin "test-compile-time" | 3.9s | |

| tf-kubernetes v0.1.0 build script (run) | 2.9s | |

| Total | 180.37s | |
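The percentages quoted for mold follow directly from the totals and the test-compile-time rows of the initial measurement and the mold measurement. As a small worked example, with reduction_percent being a helper name chosen for this sketch:

```rust
/// Relative reduction from `before` to `after`, in percent.
fn reduction_percent(before: f64, after: f64) -> f64 {
    (before - after) / before * 100.0
}
```

With the measured values, reduction_percent(206.3, 180.37) is roughly 12.6 for the total build, and reduction_percent(24.7, 3.9) is roughly 84.2 for test-compile-time.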

Using LLVM lld as a linker

While using mold gives you impressive performance, LLVM lld can reach the same level of performance in our case.

You can enable lld as your linker by setting your .cargo/config.toml to the following code:

# .cargo/config.toml

[target.x86_64-unknown-linux-gnu]

linker = "clang"

rustflags = ["-C", "link-arg=-fuse-ld=lld"]

We will run the same commands as used with mold:

cargo clean # ensure same test conditions

cargo build --timings

The results show that we can see the same improvement in compile duration as with mold:

| Unit | Total | Codegen |

|---|---|---|

| tf-kubernetes v0.1.0 | 160.4s | 111.3s (69%) |

| test-compile-time v0.1.0 bin "test-compile-time" | 4.0s | |

| tf-kubernetes v0.1.0 build script (run) | 2.9s | |

| Total | 180.7s | |

Structure of tf-bindgen

tf-bindgen is a collection of multiple smaller crates building all the necessary features:

- tf-core: Used to implement basic traits and structs required by the generated code and used by the other crates (e.g. Stack and Scope).

- tf-codegen: Used to implement code generation tools to simplify usage of tf-bindgen.

- tf-schema: The JSON schemas exposed by Terraform. Contains both JSON Provider Schema and JSON Configuration Schema.

- tf-cli: Used to implement Terraform CLI wrappers, which will take care of generating the JSON configuration and construction of the Terraform command.

- tf-binding: Bundles the crates and implements the actual code generation.